Table of Contents

A Paradigm Shift in AI Image Generation: Bye-Bye to Generators?

The field of AI image generation has been nothing short of a revolution, allowing us to create stunning visuals from simple text prompts. However, this technology has always relied on complex, computationally intensive “generator” models that require weeks or even months of training on massive datasets. The financial and environmental costs are significant. But what if there was a way to bypass the generator entirely? Researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Laboratory for Information and Decision Systems (LIDS) have recently unveiled a groundbreaking method that does just that. They discovered that a special type of neural network, known as a tokenizer, can be used for both image generation and editing, eliminating the need for a separate generator and fundamentally changing how we approach AI-driven visual media.

The Problem with Current AI Image Generators

Most of the popular AI image generators we use today, like Midjourney or Stable Diffusion, operate on a two-part system. First, a tokenizer compresses and encodes visual data into a simplified, numerical format. Then, a powerful generator model takes these encoded “tokens” and uses them to construct new images. This generator component is the bottleneck. It’s huge, slow to train, and requires immense computational power. The MIT team’s discovery challenges this entire premise by showing that the tokenizer itself holds more power than previously understood.

The traditional workflow for creating and modifying images with AI has long been a resource-heavy process. A single high-quality image can take a sophisticated model several seconds, and a developer wanting to train a new model from scratch faces an enormous investment in time and hardware. This has created a high barrier to entry, centralizing much of the innovation in the hands of a few large companies with vast data centers. The new method seeks to dismantle this structure by offering a leaner, more efficient alternative. The core idea is that instead of training a massive generator to interpret and create images from scratch, we can leverage the inherent compression capabilities of a tokenizer and manipulate the compressed data directly to achieve the desired results. This shift from a generative process to a manipulative one is the key innovation, and it has the potential to make AI image tools more accessible and democratized than ever before.

How the New MIT Method Works: A Deep Dive into 1D Tokenizers

The heart of this new approach lies in a specific type of neural network called a 1D tokenizer. While traditional tokenizers often represent images in a two-dimensional grid, this new model translates a 256×256 pixel image into a compact sequence of just 32 numbers, or tokens. This is an incredible level of compression that allows for a more holistic understanding of the image data. To put this into perspective, a typical image contains tens of thousands of pixels, each with its own color and brightness data. The 1D tokenizer can distill all of that information down to a mere 32 tokens, each of which is a 12-digit number of 1s and 0s. This creates a kind of “hidden language” that the computer uses to represent and communicate about images.

Unlocking the Power of Tokens

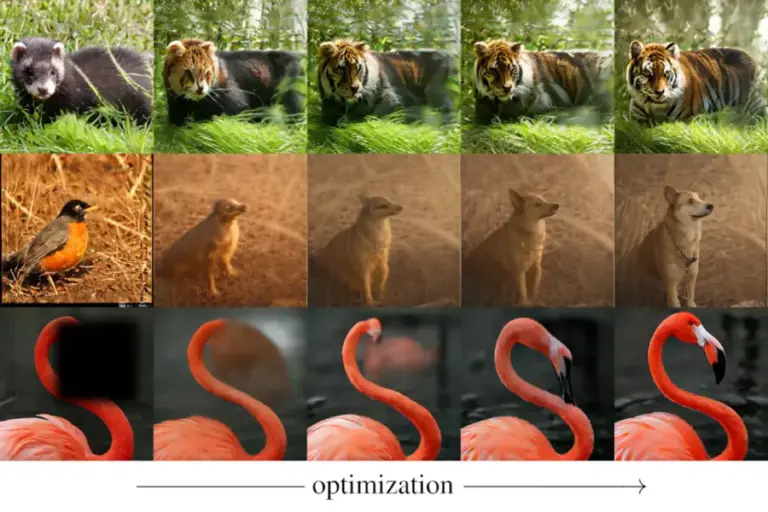

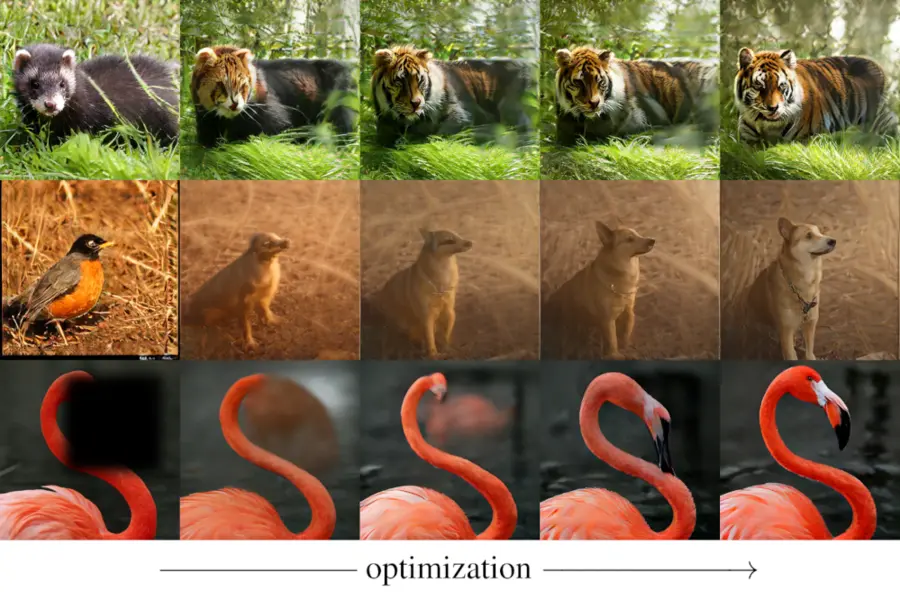

One of the key insights from the research was the discovery that individual tokens are not just random data points. They are, in fact, meaningful representations of the image’s attributes. By manipulating these tokens—adding, removing, or replacing them—researchers found they could precisely control various aspects of an image, such as its blurriness, brightness, and even the pose of an object within it. For example, changing a single token could alter the direction a bird’s head is facing. This level of granular control is a game-changer because it allows for direct editing without the need for a separate generative process. The team found that specific tokens corresponded to specific visual elements. This is a crucial finding because it means that we can now interact with the fundamental building blocks of an image in a way that was previously only possible by retraining a massive generator model. This direct manipulation is far more efficient and gives users unprecedented control over the final output.

The method also uses a “detokenizer” (or decoder), which is a much simpler model than a full-blown generator. Its job is simply to reconstruct the image from the manipulated tokens. By combining the 1D tokenizer with a detokenizer and leveraging an off-the-shelf model like CLIP, which measures how well an image matches a text prompt, the MIT team created a system capable of performing a wide range of tasks—all without a generator. This modular and lightweight approach not only reduces computational costs but also makes the entire process more flexible and easier to adapt for different applications. The core of this research is a testament to the power of understanding the underlying mechanics of AI, rather than just treating it as a black box.

| Feature | Traditional AI Image Generation | New MIT Method |

|---|---|---|

| Core Components | Tokenizer + Large Generator | 1D Tokenizer + Detokenizer |

| Training Cost | High (weeks/months) | Significantly Lower |

| Editing Method | Iterative re-generation | Direct token manipulation |

| Efficiency | Computationally demanding | Much more efficient |

Practical Applications of This Breakthrough Technology

The potential applications of this new method are vast and exciting, offering a more efficient and accessible way to work with AI-generated images. One of the most immediate benefits is the ability to perform “inpainting,” which means filling in missing parts of an image. If you have a damaged or incomplete photo, this technology can intelligently reconstruct the missing sections, a task that was previously challenging and resource-intensive. Imagine being able to restore old family photos or fill in gaps in a landscape image with unprecedented accuracy and speed. This capability is not just for restoration; it can also be used creatively to remove unwanted objects from a photo and have the AI intelligently fill in the background, a task that would normally require a skilled graphic designer and a lot of time.

Streamlined Image Conversion and Editing

Beyond inpainting, the new method excels at image-to-image conversion. The researchers demonstrated how an image of a red panda could be seamlessly transformed into a tiger, or how a real-world photo could be converted into a painting, all by simply manipulating the underlying tokens. This opens up a new realm of creative possibilities for artists and designers, allowing for quick stylistic changes and conversions without the need for complex software or lengthy processing times. This is also significant because it dramatically lowers the barrier to entry for developers and creators who may not have access to the extensive computational resources needed for training large generator models. For content creators, this means a faster workflow for creating marketing materials, social media graphics, or even conceptual art. The ability to directly manipulate the core essence of an image through its tokens provides a level of fine-tuning that is a huge step forward for the industry.

Key Takeaway: The new MIT method not only makes AI image generation more efficient but also provides a level of direct control and flexibility that was previously unattainable, opening up new possibilities for creative and technical applications.

What This Means for the Future of AI and Creativity

This breakthrough from MIT is a testament to the ongoing evolution of artificial intelligence. It shows that sometimes, the most significant leaps forward come from rethinking existing components rather than simply building bigger and more complex models. By proving that tokenizers are more than just data compressors, the researchers have laid the groundwork for a new generation of AI tools that are more efficient, more accessible, and more versatile. It challenges the conventional wisdom that bigger is always better in AI and points toward a future where efficiency and clever design are prioritized.

A Shift Towards Accessible AI

The high computational cost of training generator models has long been a barrier to entry for many researchers and developers. This new method could democratize AI image generation, allowing smaller teams and even individual creators to build powerful tools without needing a supercomputer. This shift could lead to an explosion of innovation, with more people experimenting and creating new applications for this technology. The future of AI image generation might not be about building a bigger engine, but rather about learning to use the existing parts in smarter, more creative ways게이밍 헤드셋 추천. It’s an exciting time to be in the field, and this discovery is a strong hint of what’s to come. This approach could also lead to more sustainable AI development, as the reduced computational load would mean a smaller carbon footprint associated with training and running these models. This is an important consideration as AI technology becomes more ubiquitous.

The implications of this technology extend beyond just image generation and editing. The ability to represent complex visual information with a highly compressed, meaningful set of tokens could have applications in other areas of computer vision, such as object recognition, video analysis, and even augmented reality. For instance, a system that understands the core attributes of an image through its tokens could perform tasks like scene recognition or anomaly detection with greater efficiency. The discovery is not just a new tool; it’s a new way of thinking about how AI processes and understands the visual world, and it’s a step toward a future where AI is less of a black box and more of a predictable, controllable partner in creative and technical endeavors.

Conclusion: A New Era of Efficiency and Control

MIT’s latest research marks a significant milestone in the world of AI image generation. By leveraging the power of 1D tokenizers, researchers have demonstrated a method to edit and generate images without the need for the large, resource-hungry generators that have defined the field until now. This innovation not only drastically reduces computational costs but also provides a level of direct, precise control over image attributes that was previously unimaginable. From simple inpainting to complex image conversions, this technology has the potential to streamline workflows, democratize access to powerful AI tools, and inspire a new wave of creativity. As this research develops, we can expect to see a new era of AI image tools that are faster, more efficient, and more finely tuned to the needs of their users. This is not just an incremental improvement; it is a fundamental re-imagining of the entire process, and it holds the promise of a future where AI image manipulation is more accessible and more powerful for everyone.